Back in town after a few days. It's rewarding that the technique is working for others!

- Can you say more about how to use 'floor' to improve the outcomes with NoiseX, and when (that is, under what conditions)?

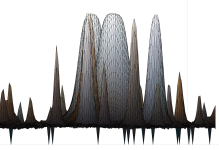

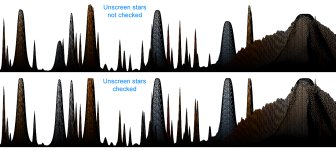

I was surprised at how many operations produce pixels with a value of exactly 0.0. I'll look for an example image, but when 0.0 pixels are present, NoiseX produces surprising dips from the general noise floor all the way down to 0.0. Please remember that I don't have access to RC Astro's code or design and am deducing how the software works from its results. Height-field plots with X/Y views showed me when these dips happen, and experimentation led to this discovery. If you're working in 32-bit, remove four zeros from the value of the "lowest" variable.

Why 0.0 pixels appear isn't clear mathematically, but they can show up after LHE in a starless field, after saturation increases, and after other operations you wouldn't expect. While I work out the "why," I keep Statistics open at all times with Unclipped unchecked, and I want to see 100% after every operation. If it isn't 100%, I apply 'floor'. This often brings it back to 100%, but sometimes normalization is part of an operation and produces 1.0 pixels as well. In that case, I undo 'floor' and apply 'clamp'.

It's fair to ask whether removing a few 1.0 pixels is critical, and I must answer no. However, 'clamp' does tell me how many saturated pixels exist. If I feel there are too many, I repeat the previous convolution starting from a lower offset, and keep going until only a few pixels, if any, are normalized to 1.0.
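To make the Statistics check and the two fixes concrete, here is a minimal Python/NumPy sketch. The actual 'floor' and 'clamp' expressions aren't reproduced in this text, so this assumes 'floor' raises zero pixels to a tiny positive constant and 'clamp' bounds pixels between that constant and a value just below 1.0. `LOWEST`, `HIGHEST`, `floor_op`, and `clamp_op` are hypothetical names and values. `unclipped_fraction` reflects my reading of the 100% figure: the share of pixels strictly between 0 and 1.

```python
import numpy as np

# Hypothetical constants (not the values in the original 'floor'/'clamp'
# expressions). They assume float64 data.
LOWEST = 1e-12          # assumed tiny positive floor
HIGHEST = 1.0 - 1e-12   # assumed ceiling just below full scale

def unclipped_fraction(img):
    """Percentage of pixels strictly inside (0, 1): neither black- nor white-clipped."""
    inside = (img > 0.0) & (img < 1.0)
    return 100.0 * inside.mean()

def floor_op(img, lowest=LOWEST):
    """Raise every pixel at or below zero (in fact, below `lowest`) to `lowest`."""
    return np.maximum(img, lowest)

def clamp_op(img, lowest=LOWEST, highest=HIGHEST):
    """Bound pixels to [lowest, highest] and report how many sat at 1.0 or above."""
    n_saturated = int(np.count_nonzero(img >= 1.0))
    return np.clip(img, lowest, highest), n_saturated

img = np.array([[0.0, 0.2], [0.5, 1.0]])   # one black and one white pixel
print(unclipped_fraction(img))             # 50.0
fixed, n_sat = clamp_op(img)
print(unclipped_fraction(fixed), n_sat)    # 100.0 1
```

In float32, a ceiling of 1 - 1e-12 would round to exactly 1.0, so constants like these would need adjusting for 32-bit data.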

- Can you say more about the use of 'recip_E' before BlurX - what is the purpose and what does it do?

The purpose is to give convolutions, particularly BlurX, enough working room to optimize their results. I hope Russell will explain what's actually happening, but the idea came from wanting to see hue spread evenly across star faces after stretching. I found that 64-bit worked better simply because of its higher precision: in the height-field, the stars were a bit tighter and their hues more accurate. It appeared that BlurX tries to keep all of the pre-convolution photon flux in its result. So the question arose: what if BlurX started with a lot of headroom? Could it conserve flux more effectively? It worked.
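The headroom idea can be illustrated with a toy model. This Python/NumPy sketch does not reproduce BlurX: `tighten` is a hypothetical stand-in for any flux-conserving sharpening step (it squares the profile and rescales it back to the original total), and the result is clipped to [0, 1] as a normalized image would be. I'm also assuming, from the name alone, that 'recip_E' scales the image by 1/e; that factor is a guess, not the actual expression. Starting from a star that peaks at 0.9, the sharpened core overshoots 1.0 and the clipped flux is lost. Scaled down first, the same operation stays below 1.0 and keeps all of its flux.

```python
import numpy as np

def tighten(profile, power=2.0):
    """Toy flux-conserving 'sharpen' (hypothetical, not BlurX): raise the profile
    to a power, then rescale so the total flux is unchanged."""
    t = profile ** power
    return t * (profile.sum() / t.sum())

def process(profile, scale=1.0):
    """Scale, sharpen, then clip to [0, 1] as a normalized image would."""
    return np.clip(tighten(profile * scale), 0.0, 1.0)

x = np.linspace(-3.0, 3.0, 61)
star = 0.9 * np.exp(-x**2 / 2)       # 1-D Gaussian star peaking at 0.9

k = np.exp(-1)                       # assumed 1/e headroom factor
no_headroom = process(star)
headroom = process(star, scale=k)

print(no_headroom.sum() / star.sum())      # noticeably below 1.0: flux clipped away
print(headroom.sum() / (star.sum() * k))   # 1.0 to within rounding: flux conserved
```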

I had Statistics open during LHE and saw similar excursions, so I applied 'recip_E' and saw better results in the image. LHE doesn't seem to normalize by default, so applying 'floor' is often all that's needed before a second LHE pass. At the end, you can self-normalize the nebulosity with PixelMath to whatever brightness suits the scene.
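The final self-normalization amounts to a linear rescale that puts the brightest pixel at a chosen level. A minimal NumPy sketch follows; `self_normalize` and its `target_max` parameter are hypothetical names, since the PixelMath expression itself isn't given here.

```python
import numpy as np

def self_normalize(img, target_max=1.0):
    """Linearly rescale img so its brightest pixel equals target_max.

    target_max is the 'whatever brightness suits the scene' knob; a value
    below 1.0 leaves some headroom in the result.
    """
    peak = float(img.max())
    if peak <= 0.0:
        raise ValueError("image has no positive pixels to normalize against")
    return img * (target_max / peak)

# Hypothetical dim nebulosity left over from headroom-preserving steps.
nebula = np.array([[0.02, 0.05], [0.11, 0.30]])
bright = self_normalize(nebula, target_max=0.9)
print(bright.max())
```

Because the rescale is linear, it changes overall brightness without altering the ratios between pixels.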

- Can you say more about using saturation so early in the workflow? I don't think I've seen that before.

This was very surprising. My blue channel is always the tightest and seemed to come out less saturated than the longer wavelengths. But all of its flux was concentrated in a small area, so the added headroom allowed saturation to increase evenly across wavelengths. One must be careful where saturation goes, though. For the study, I applied saturation as the first step, since it's frequently first in people's workflows (at least on CN). I've found the best place to apply saturation is after the first BlurX step, run with Correct Only. Russell mentions using it this way; in this application, Correct Only brings the channel FWHMs into line and corrects poor PSFs. With undistorted star faces, saturation is applied across them more accurately.
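A toy model shows why headroom can matter for saturation. This sketch uses a simple hypothetical saturation boost (push each channel away from the pixel's mean by a factor `s`, then clip to [0, 1]); it is not PixInsight's saturation tool. The 1/e headroom factor is likewise an assumption based only on the name 'recip_E'. For a bright, blue-heavy star core, the blue channel hits 1.0 and the boost ends up uneven. Scaled down first, every channel moves by exactly `s` times its original offset.

```python
import numpy as np

def boost_saturation(rgb, s=1.5):
    """Toy saturation boost (hypothetical): scale each channel's offset from
    the pixel mean by s, then clip to the normalized [0, 1] range."""
    mean = rgb.mean(axis=-1, keepdims=True)
    return np.clip(mean + s * (rgb - mean), 0.0, 1.0)

def spread(rgb):
    """Strongest minus weakest channel: a crude chroma measure."""
    return rgb.max() - rgb.min()

core = np.array([0.70, 0.80, 0.95])  # hypothetical bright, blue-heavy star core (R, G, B)
k = np.exp(-1)                       # assumed 1/e headroom factor

plain = boost_saturation(core)          # blue is pushed past 1.0 and clipped
roomy = boost_saturation(core * k) / k  # boost with headroom, rescaled to compare

print(spread(plain) / spread(core))  # about 1.43: less than the requested 1.5
print(spread(roomy) / spread(core))  # 1.5 to within rounding: an even boost
```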

I have a family life, so I need to take a break. I'll be back with more discussion and a NoiseX image. Thank you for posting your results! I'm pleased you find the technique works for you.